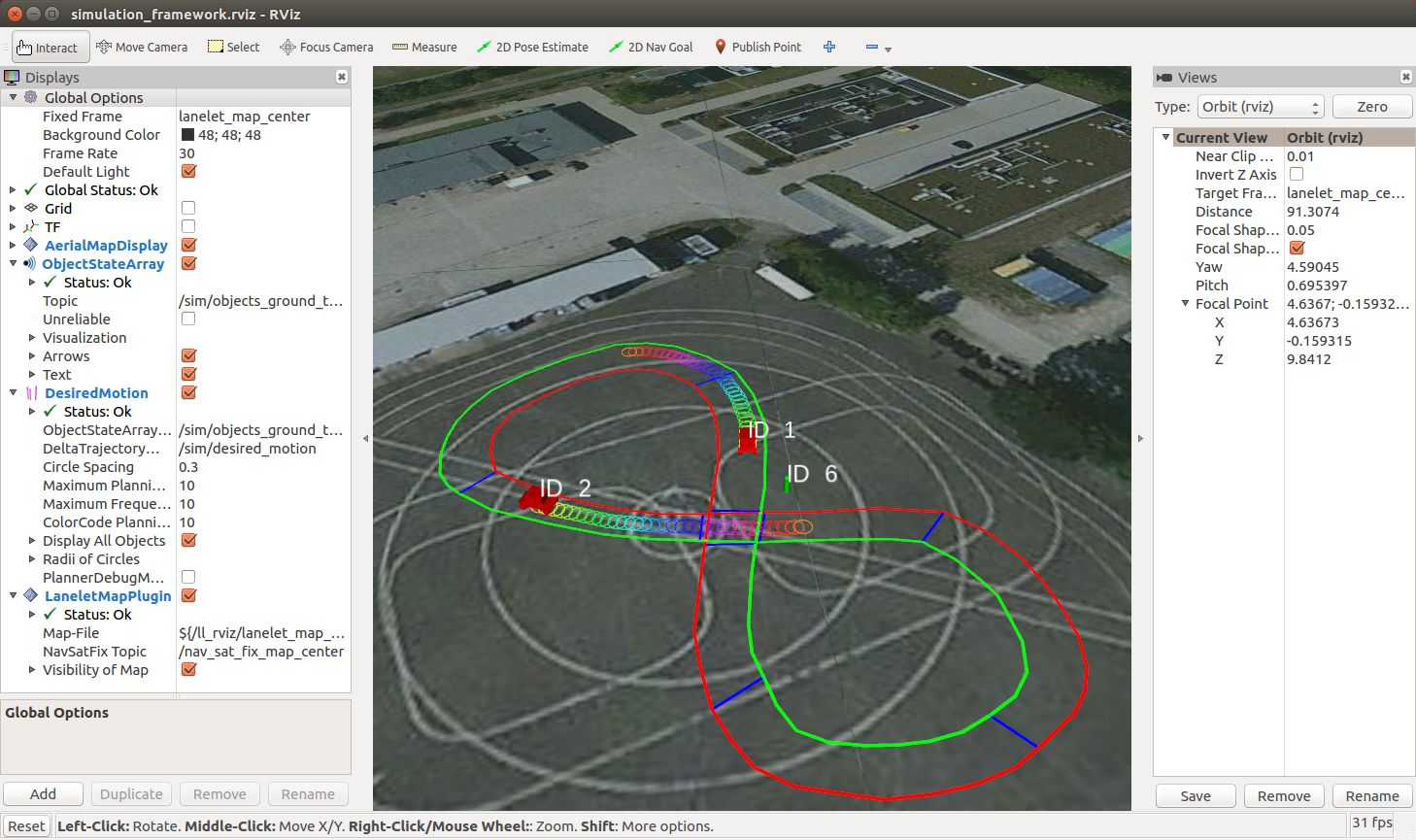

CoInCar-Sim

For cooperative motion planning, interaction between traffic participants is crucial. Consequently, simulations in which other traffic participants follow simple behavioral rules are no longer sufficient for development and evaluation. To close this gap, we implemented a multi-vehicle simulation framework. Conventional simulation agents with simple, rule-based behavior are replaced by multiple instances of sophisticated behavior generation algorithms. This facilitates the development, testing, and simulative evaluation of cooperative planning approaches. The framework is implemented using the Robot Operating System (ROS): https://github.com/coincar-sim/

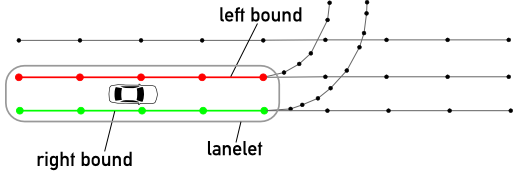

Lanelet2

Lanelet2 is a C++ library for handling map data in the context of automated driving. It is designed to use high-definition map data to efficiently handle the challenges a vehicle faces in complex traffic scenarios. Flexibility and extensibility are among its core principles, so that it can meet the upcoming challenges of future maps. https://github.com/fzi-forschungszentrum-informatik/Lanelet2

Quadcopter base framework and lab course: arDroneManual/Autonomous

This framework simplifies the development of autonomous flight applications for the popular Parrot AR.Drone 2.0 quadcopter. It consists of two applications: arDroneAutonomous provides undistorted camera images and other measurements, anti-windup PID position controllers, and keyboard and gamepad input. arDroneManual provides concurrent manual control and audio feedback on the quadcopter's state.
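
The controllers of arDroneAutonomous are not documented here in detail. As a generic illustration of what "anti-windup" means, the following is a minimal sketch of a discrete PID controller that uses conditional integration (integrator clamping), which is only one of several common anti-windup schemes. The class name, its fields, and the clamping strategy are hypothetical and are not the framework's implementation; the sketch also assumes a positive `ki`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AntiWindupPID:
    """Discrete PID controller with conditional integration (integrator clamping).

    Hypothetical illustration only; not the arDroneAutonomous controller.
    """
    kp: float
    ki: float
    kd: float
    u_min: float
    u_max: float
    integral: float = 0.0
    prev_error: Optional[float] = None

    def step(self, error: float, dt: float) -> float:
        # Backward-difference derivative; zero on the very first call.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error

        # Tentatively integrate the error and compute the unsaturated output.
        candidate = self.integral + error * dt
        u = self.kp * error + self.ki * candidate + self.kd * derivative
        u_sat = min(max(u, self.u_min), self.u_max)

        # Anti-windup: keep the new integral only if the output is not saturated,
        # or if the error drives the output back toward the allowed range.
        unsaturated = u == u_sat
        unwinding = (u > self.u_max and error < 0) or (u < self.u_min and error > 0)
        if unsaturated or unwinding:
            self.integral = candidate
        return u_sat
```

In a position loop, one such controller per axis would be stepped once per control cycle with the current position error and the cycle time `dt`. Because the integral is frozen while the output is saturated, the controller does not overshoot excessively once the error changes sign.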

In our lab courses MTP and RVMRT and in the KSOP Optics and Photonics Lab, this framework is used for hands-on learning of Ziegler-Nichols controller tuning, as well as detecting a line on the ground and navigating along it autonomously. This video shows the lab's final result. A German script is already available; an English version will appear shortly. For the LaTeX sources of the scripts, please contact us.
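
For reference, the classic Ziegler-Nichols ultimate-gain method derives controller gains from two measured quantities: the proportional gain Ku at which the closed loop oscillates with constant amplitude, and the period Tu of that oscillation. The sketch below encodes the textbook table and converts it to parallel-form gains. Whether the lab course uses exactly this variant of the rules is not stated here, and the function and dictionary names are hypothetical.

```python
from typing import Tuple

# Classic Ziegler-Nichols ultimate-gain rules as (a, b, c) with
# Kp = a*Ku, Ti = b*Tu, Td = c*Tu. A b of None means "no integral term".
ZN_RULES = {
    "P": (0.5, None, 0.0),
    "PI": (0.45, 1 / 1.2, 0.0),
    "PID": (0.6, 0.5, 0.125),
}


def ziegler_nichols(ku: float, tu: float, kind: str = "PID") -> Tuple[float, float, float]:
    """Return parallel-form gains (kp, ki, kd) from ultimate gain ku and period tu [s]."""
    a, b, c = ZN_RULES[kind]
    kp = a * ku
    ki = 0.0 if b is None else kp / (b * tu)  # Ki = Kp / Ti
    kd = kp * c * tu                          # Kd = Kp * Td
    return kp, ki, kd
```

For example, with a measured Ku = 2.0 and Tu = 1.0 s, the PID rule yields Kp = 1.2, Ki = 2.4 and Kd = 0.15. These values are a starting point that usually needs manual fine-tuning.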

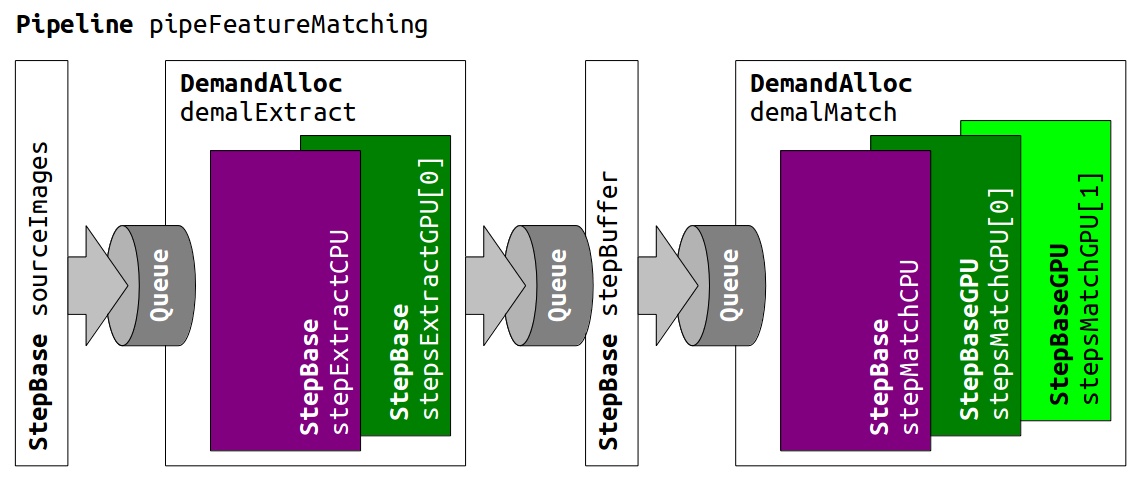

On-line Performance and Efficiency Optimization: libHawaii

Modern systems for computer vision and other real-time applications contain not only more and more CPU cores but also graphics processing units (GPUs). For such systems, this C++ library can automatically and continuously optimize the throughput and energy efficiency of user-defined stream applications. This paper (accepted version) explains the algorithms and implementation of libHawaii and demonstrates its use on the authors' own applications. An earlier prototype for optimizing the performance of dense stereo vision in particular is described in this paper (accepted version).

Lanelet Maps: libLanelet

This library parses OpenStreetMap files and builds so-called lanelet maps. Those maps represent the environment both in topological and geometrical terms and can be used as a building block in autonomous driving applications. We provide the library as well as supplementary material on this website.
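
To illustrate how a lanelet can be recovered from an OpenStreetMap file, the following minimal sketch uses only Python's standard XML parser. It assumes that a lanelet is stored as an OSM relation tagged `type=lanelet` whose way members carry the roles `left` and `right` (the two bounds of the lane). This layout is an assumption for illustration; the sketch does not reproduce libLanelet's API, and `parse_lanelets` is a hypothetical name.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

LatLon = Tuple[float, float]


def parse_lanelets(osm_xml: str) -> Dict[int, Dict[str, List[LatLon]]]:
    """Return {relation_id: {"left": [...], "right": [...]}} for lanelet relations.

    Assumes lanelets are OSM relations tagged type=lanelet whose way members
    carry the roles "left" and "right" (hypothetical simplification).
    """
    root = ET.fromstring(osm_xml)
    # Node id -> (lat, lon)
    nodes = {int(n.get("id")): (float(n.get("lat")), float(n.get("lon")))
             for n in root.iter("node")}
    # Way id -> ordered list of node coordinates (the bound's polyline)
    ways = {int(w.get("id")): [nodes[int(nd.get("ref"))] for nd in w.iter("nd")]
            for w in root.iter("way")}

    lanelets: Dict[int, Dict[str, List[LatLon]]] = {}
    for rel in root.iter("relation"):
        tags = {t.get("k"): t.get("v") for t in rel.findall("tag")}
        if tags.get("type") != "lanelet":
            continue
        bounds = {m.get("role"): ways[int(m.get("ref"))]
                  for m in rel.findall("member")
                  if m.get("type") == "way" and m.get("role") in ("left", "right")}
        # Keep only lanelets for which both bounds are present.
        if "left" in bounds and "right" in bounds:
            lanelets[int(rel.get("id"))] = bounds
    return lanelets
```

The topological part of a lanelet map (which lanelets follow or neighbor each other) would be derived from shared nodes between bounds; that step is omitted here.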

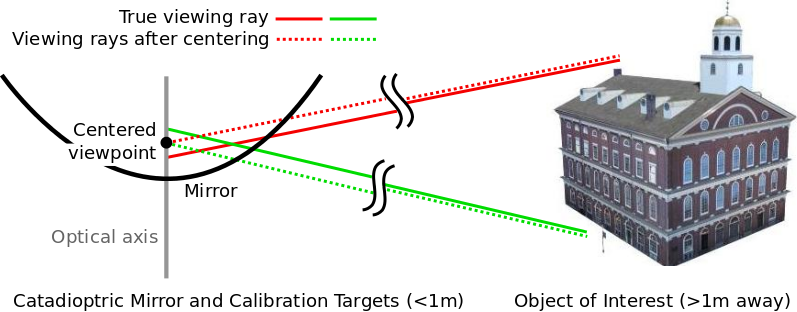

Omni Calibration Toolbox for Quasi-Central Catadioptric Cameras

This toolbox can be used to calibrate quasi-central catadioptric cameras intrinsically and extrinsically from multiple images of a planar checkerboard. Automatic corner detection and correlation are included. Furthermore, a dataset from two catadioptric cameras, with calibration images and landmarks for evaluating the calibration performance, is enclosed. The implementation contains state-of-the-art central projection functions and the centered projection function for slightly non-central catadioptric cameras. More information is available on this website.
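
As an example of a central projection function, one widely used model for catadioptric cameras is the unified (sphere) model: a 3D point is first projected onto a unit sphere and then perspectively projected from a center shifted by a mirror parameter ξ along the optical axis. The sketch below implements that model without lens distortion. Whether the toolbox uses this model, and in which parametrization, is not stated here; the function name and the intrinsic parameters `fx`, `fy`, `cx`, `cy` are hypothetical.

```python
import math
from typing import Tuple


def project_unified(point: Tuple[float, float, float], xi: float,
                    fx: float, fy: float, cx: float, cy: float) -> Tuple[float, float]:
    """Project a 3D point (camera frame) to pixels with the unified sphere model.

    xi = 0 reduces to an ideal pinhole camera. Lens distortion is ignored.
    """
    x, y, z = point
    norm = math.sqrt(x * x + y * y + z * z)
    # 1) Project onto the unit sphere centered at the single effective viewpoint.
    xs, ys, zs = x / norm, y / norm, z / norm
    # 2) Perspective projection from a center shifted by xi along the optical axis.
    denom = zs + xi
    if denom <= 0.0:
        raise ValueError("point cannot be projected for this xi")
    mx, my = xs / denom, ys / denom
    # 3) Apply the (hypothetical, skew-free) intrinsic parameters.
    return fx * mx + cx, fy * my + cy
```

Setting `xi = 0` is a useful sanity check, since the model must then agree with an ordinary pinhole projection. A quasi-central camera violates the single-viewpoint assumption slightly, which is why a separate non-central projection function is needed for it.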

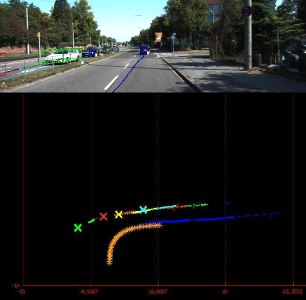

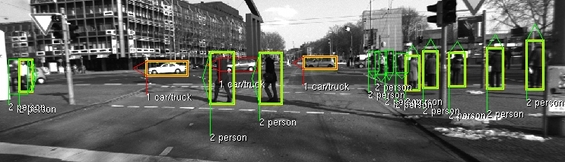

TriTrack2: MATLAB library for 3D moving object detection and tracking

TriTrack 2 is a MATLAB library for 3D moving object detection and tracking. It takes rectified stereo image pairs as input and outputs 3D bounding boxes in world coordinates. The approach detects sparse feature points and estimates scene flow from these features. Egomotion is accounted for using image information only. Moving objects are detected by clustering the scene flow while taking into account the stereo error, which grows quadratically with depth. Detections are tracked over time. The source code of triTrack2, including a readme file with installation instructions and a short demo sequence, can be downloaded here.
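
The quadratic growth of the stereo error follows from first-order error propagation through the triangulation formula Z = fB/d, where f is the focal length in pixels, B the baseline, and d the disparity. The function below is a minimal sketch of that relation. The default disparity noise of 0.5 px and the focal length and baseline used in the example are hypothetical values, not TriTrack 2 parameters.

```python
def depth_std(z_m: float, focal_px: float, baseline_m: float,
              disparity_std_px: float = 0.5) -> float:
    """First-order standard deviation of stereo depth at depth z_m.

    From Z = f*B/d it follows that |dZ/dd| = f*B/d**2 = Z**2/(f*B), so
    sigma_Z ~= Z**2 * sigma_d / (f*B): the error grows quadratically with depth.
    """
    return z_m * z_m * disparity_std_px / (focal_px * baseline_m)
```

With f = 700 px and B = 0.5 m, the depth uncertainty at 20 m is four times that at 10 m. A clustering step can use such a depth-dependent uncertainty, for example, to allow larger tolerances along the viewing direction for distant points than for close ones.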

DIRD: An illumination robust descriptor for place recognition

This website presents DIRD-based place recognition. Source code and datasets are freely available. A DIRD feature vector is extracted for every image of a given sequence; pairs of images that belong to the same place are then found automatically. The method is very robust against illumination changes.

This library can be used for loop closure detection in visual SLAM. It is written in C++, is self-contained, compiles easily with CMake, runs on Linux and Windows, and includes MATLAB wrappers as well as sample datasets. Feature extraction is very fast (approx. 7 ms on a single core), and place recognition is accelerated using SSE intrinsics. More information is available on this website.
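
Once a feature vector has been extracted for every image, loop closure candidates are pairs of images whose vectors are close. The sketch below shows this matching step as a plain brute-force search. It does not compute the DIRD descriptor itself and does not reproduce the library's SSE-accelerated implementation; the L1 distance, the `threshold`, and the `min_gap` parameter are assumptions for illustration, and descriptors are assumed to be equal-length numeric vectors.

```python
from typing import List, Sequence, Tuple


def l1_distance(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(abs(x - y) for x, y in zip(a, b))


def find_loop_closures(descriptors: Sequence[Sequence[float]], threshold: float,
                       min_gap: int = 50) -> List[Tuple[int, int, float]]:
    """Brute-force search for image pairs (i, j) whose descriptors are close.

    Frames closer than min_gap in time are skipped, since consecutive frames
    trivially look alike and would not indicate a revisited place.
    """
    matches = []
    for i in range(len(descriptors)):
        for j in range(i + min_gap, len(descriptors)):
            d = l1_distance(descriptors[i], descriptors[j])
            if d < threshold:
                matches.append((i, j, d))
    return matches
```

The search is quadratic in the sequence length, which is why vectorized distance computation matters for long sequences.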

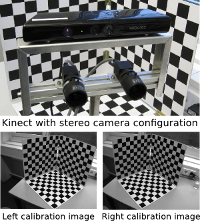

Online Toolbox for Camera and Range Sensor Calibration

This online toolbox fully automatically calibrates one or multiple video cameras intrinsically and extrinsically, using only a single image per sensor that shows a set of planar checkerboard calibration patterns. Furthermore, if provided, it registers the point cloud of a 3D laser range finder with respect to the first camera's coordinate system. The main assumptions of the algorithm are that all cameras and the range finder share a common field of view and that the checkerboard patterns are visible in all images, cover most parts of the images, and are presented at various distances and orientations. More information is available on this website.

LIBVISO2: Library for Visual Odometry

LIBVISO2 is a very fast cross-platform (Linux, Windows) C++ library with MATLAB wrappers for computing the 6-DOF motion of a moving mono/stereo camera rig. The stereo version is based on minimizing the reprojection error of sparse feature matches and runs in real time (>20 fps on an i7 at VGA resolution). No motion model or setup restrictions are imposed, except that the input images must be rectified and the calibration parameters must be known. The monocular version uses the 8-point algorithm for fundamental matrix estimation. To estimate the scale, it assumes that the camera moves at a known and fixed height above the ground. The library also includes a simple structure-from-motion pipeline for reconstructing sparse 3D point clouds. The source code of libviso2, including a readme file with installation instructions, can be downloaded here. More information is available on this website.
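
Monocular motion estimation recovers translation only up to an unknown scale factor. The known camera height resolves this ambiguity: if the ground appears at height h_est in the up-to-scale reconstruction and the true height is h, the scale is h / h_est. The sketch below illustrates this idea with a deliberately simple ground estimate (the median height of reconstructed points below the camera). It is a hypothetical simplification and not LIBVISO2's actual ground-plane estimation; it assumes camera coordinates with the y axis pointing down.

```python
import statistics
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def rescale_translation(translation: Vec3, points_cam: Sequence[Vec3],
                        camera_height_m: float) -> Tuple[Vec3, float]:
    """Scale an up-to-scale monocular translation using a known camera height.

    Assumes camera coordinates with the y axis pointing down, so points below
    the camera have y > 0, and that those points mostly lie on the road.
    """
    below = [p[1] for p in points_cam if p[1] > 0.0]
    if not below:
        raise ValueError("no points below the camera to estimate the ground height")
    # Median is a cheap, outlier-tolerant stand-in for a proper ground-plane fit.
    estimated_height = statistics.median(below)
    scale = camera_height_m / estimated_height
    scaled = (scale * translation[0], scale * translation[1], scale * translation[2])
    return scaled, scale
```

If, for instance, the road points lie at y = 0.5 in the reconstruction and the camera is mounted 1.7 m above the ground, every up-to-scale translation is multiplied by 3.4.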

LIBELAS: Library for Efficient Large-scale Stereo Matching

LIBELAS is a cross-platform (Linux, Windows) C++ library with MATLAB wrappers for computing disparity maps from rectified gray-level stereo pairs. It is robust against moderate changes in illumination and well suited for robotics applications with high-resolution images. Computing the left and right disparity maps of a one-megapixel image takes less than one second on a single i7 CPU core. A sub-sampling option allows computing disparity maps at half image resolution but full depth resolution at 10 fps. The source code of libelas, including a readme file with installation instructions, can be downloaded here. More information is available on this website.
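
Having both a left and a right disparity map enables a left-right consistency check, a common post-processing step that removes mismatches and occluded pixels. The sketch below shows the generic check on nested Python lists. It is not LIBELAS's internal post-processing; the function name, the `INVALID` marker, and the `max_diff` tolerance are hypothetical.

```python
from typing import List

INVALID = -1.0  # marker for rejected pixels (hypothetical convention)


def left_right_check(disp_left: List[List[float]], disp_right: List[List[float]],
                     max_diff: float = 1.0) -> List[List[float]]:
    """Invalidate left disparities that are not confirmed by the right map.

    A left pixel (x, y) with disparity d corresponds to the right pixel
    (x - d, y); it is kept only if the right map stores a similar disparity there.
    """
    result = []
    for row_l, row_r in zip(disp_left, disp_right):
        width = len(row_r)
        out_row = []
        for x, d in enumerate(row_l):
            xr = int(round(x - d))
            consistent = (d >= 0.0 and 0 <= xr < width and row_r[xr] >= 0.0
                          and abs(d - row_r[xr]) <= max_diff)
            out_row.append(d if consistent else INVALID)
        result.append(out_row)
    return result
```

Pixels that are visible in only one image (for example, next to object boundaries) usually fail this test, which is the intended behavior.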

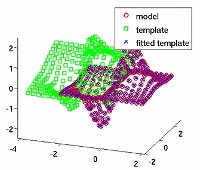

LIBICP: Library for Iterative Closest Point Fitting

LIBICP is a cross-platform C++ library with MATLAB wrappers for fitting 2D or 3D point clouds to each other. It currently implements the SVD-based point-to-point algorithm as well as the linearized point-to-plane algorithm. It also supports outlier rejection and is accelerated by k-d trees as well as a coarse matching stage that uses only a subset of all points. The source code of libicp, including a readme file with installation instructions, can be downloaded here. More information is available on this website.
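
The point-to-point variant alternates two steps: associate each source point with its nearest target point, then solve in closed form for the rigid transform that best aligns the associated pairs. The sketch below does this in 2D, where the SVD-based solution reduces to a single `atan2`. It is a minimal illustration under simplifying assumptions (brute-force nearest neighbors, no outlier rejection, no coarse matching stage) and does not reflect LIBICP's API; all names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]
Transform = Tuple[float, float, float]  # (theta [rad], tx, ty)


def best_rigid_transform(src: Sequence[Point], dst: Sequence[Point]) -> Transform:
    """Closed-form least-squares rotation + translation mapping src onto dst.

    This is the 2D special case of the SVD-based (Kabsch/Umeyama) solution.
    """
    n = len(src)
    csx = sum(p[0] for p in src) / n
    csy = sum(p[1] for p in src) / n
    cdx = sum(q[0] for q in dst) / n
    cdy = sum(q[1] for q in dst) / n
    s_cos = s_sin = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        ax, ay, bx, by = sx - csx, sy - csy, dx - cdx, dy - cdy
        s_cos += ax * bx + ay * by  # sum of dot products
        s_sin += ax * by - ay * bx  # sum of 2D cross products
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated source centroid onto the target centroid.
    return theta, cdx - (c * csx - s * csy), cdy - (s * csx + c * csy)


def apply_transform(t: Transform, pts: Sequence[Point]) -> List[Point]:
    theta, tx, ty = t
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in pts]


def icp_point_to_point(source: Sequence[Point], target: Sequence[Point],
                       iterations: int = 20) -> Transform:
    current = list(source)
    for _ in range(iterations):
        # Brute-force nearest neighbors (a k-d tree would speed this up).
        matched = [min(target, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)
                   for p in current]
        current = apply_transform(best_rigid_transform(current, matched), current)
    # `current` is a rigid transform of `source`, so this recovers the total motion.
    return best_rigid_transform(source, current)
```

Like any ICP variant, this converges only from a reasonable initial alignment. With large initial offsets, the nearest-neighbor associations are wrong and the iteration may settle in a local minimum.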

License Information

This software is free to use for research and non-commercial projects. When downloading software from this website, you accept our license agreements:

All software and source code on this website is copyrighted by the respective authors and available under the GNU General Public License v3. This means that if you distribute software that uses our software, you must distribute it under the GPL, including its source code.

Another option is to contact us in order to purchase a commercial license.

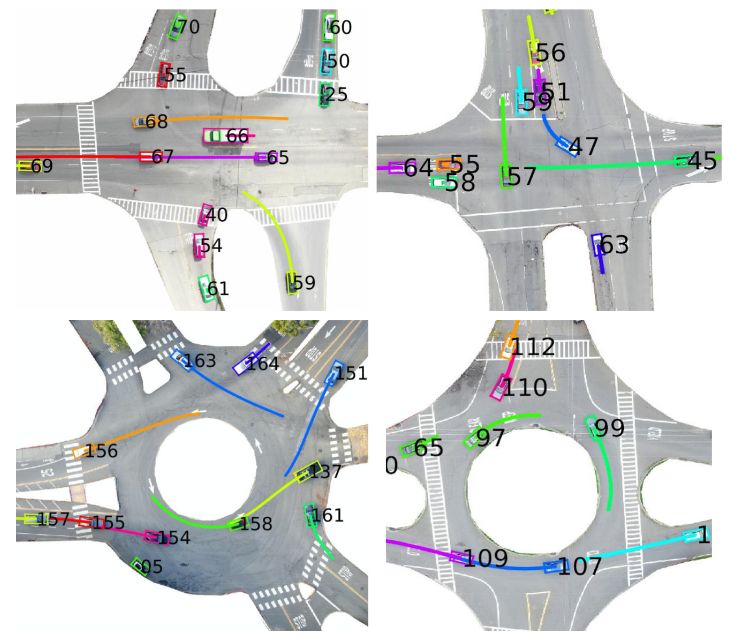

INTERACTION Dataset

The INTERACTION dataset contains naturalistic motions of various traffic participants in a variety of highly interactive driving scenarios from different countries. The dataset can be used in many behavior-related research areas, such as

- intention/behavior/motion prediction,

- behavior cloning and imitation learning,

- behavior analysis and modeling,

- motion pattern and representation learning,

- interactive behavior extraction and categorization,

- social and human-like behavior generation,

- decision-making and planning algorithm development and verification,

- driving scenario/case generation, etc.

To download the dataset, visit: https://interaction-dataset.com/

For Python tools to work with the dataset, visit: https://github.com/interaction-dataset/interaction-dataset
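
As a starting point for the research areas listed above, trajectories are typically grouped per traffic participant. The sketch below does this with Python's `csv` module. It assumes that the track CSV files contain a header with at least the columns `track_id`, `timestamp_ms`, `x`, and `y`. These column names are an assumption that should be checked against the dataset documentation and the official Python tools linked above, which are the authoritative loaders. `load_tracks` is a hypothetical helper.

```python
import csv
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Sample = Tuple[int, float, float]  # (timestamp_ms, x, y)


def load_tracks(lines: Iterable[str]) -> Dict[int, List[Sample]]:
    """Group rows of a track CSV by track_id, sorted by timestamp.

    Assumes the header contains the columns track_id, timestamp_ms, x and y;
    any other columns are ignored.
    """
    tracks: Dict[int, List[Sample]] = defaultdict(list)
    for row in csv.DictReader(lines):
        tracks[int(row["track_id"])].append(
            (int(row["timestamp_ms"]), float(row["x"]), float(row["y"])))
    for samples in tracks.values():
        samples.sort()  # tuples sort by timestamp first
    return dict(tracks)
```

For a downloaded file, pass an open file handle (opened with `newline=""`, as recommended for the `csv` module) instead of a list of lines.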

The KITTI Vision Benchmark Suite

This dataset takes advantage of our autonomous driving platform Annieway. We present challenging real-world benchmarks for evaluating tasks such as stereo, optical flow, visual odometry, 3D object detection, and 3D tracking. For this purpose, we equipped a standard station wagon with two high-resolution color and grayscale video cameras, using a baseline of roughly 54 centimeters. Accurate ground truth is provided by a Velodyne laser scanner and a GPS localization system with an integrated inertial measurement unit and RTK corrections. All sensors have been calibrated and synchronized. Our datasets are captured by driving around a mid-size city, in rural areas, and on highways. Up to 15 cars and 30 pedestrians are visible per image. Besides providing all data in raw format, we extract benchmarks for each task. For each benchmark, we also provide an evaluation metric and an online evaluation website.
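
With the roughly 54 cm baseline stated above, depth follows from disparity via the standard rectified-stereo relation Z = fB/d. The following is a minimal sketch of that conversion. The 700 px focal length in the example is a hypothetical value; the actual focal length should be taken from the provided calibration data.

```python
import math


def disparity_to_depth(disparity_px: float, focal_px: float,
                       baseline_m: float = 0.54) -> float:
    """Depth (m) of a point seen with the given disparity in a rectified stereo pair.

    Z = f * B / d; the default baseline is the roughly 54 cm of the setup.
    Zero or negative disparity (no match / point at infinity) returns inf.
    """
    if disparity_px <= 0.0:
        return math.inf
    return focal_px * baseline_m / disparity_px
```

With f = 700 px, a disparity of 37.8 px corresponds to a depth of about 10 m. A wide baseline such as 54 cm yields larger disparities at a given depth and therefore better depth resolution at long range.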

Karlsruhe Object Dataset 2011: Cars and Pedestrians

This dataset contains roughly 1000 images each for cars and for pedestrians, together with object bounding boxes (~10000 bounding boxes in total). Furthermore, it contains the orientation of each object, discretized into 8 classes for cars and 4 classes for pedestrians. The .zip files provided below come with a MATLAB-based label viewer. Labels are saved as MATLAB matrices (.mat files), where each row is an object in the image and the columns correspond to the position, size, class, and orientation of the object. You can use this dataset to train your preferred object detector. We had very good experience with the cascaded part-based L-SVM by Ross Girshick and Pedro Felzenszwalb, which we modified to fix the latent orientations to the ones given by the labels. We were able to detect very small objects by upsampling the original images by up to a factor of three. You can download the datasets here.
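
Discretizing a continuous orientation into a fixed number of classes amounts to binning the angle into equal-width sectors. The sketch below shows one such binning. The reference direction and the placement of the bin boundaries used in this dataset are not stated here, so the convention in the code (class 0 centered on 0 rad) is hypothetical; the MATLAB label viewer is the authoritative reference for the actual mapping.

```python
import math


def orientation_class(angle_rad: float, num_classes: int) -> int:
    """Map a continuous orientation to one of num_classes equal-width bins.

    Bin k is centered on k * 2*pi / num_classes, so class 0 covers angles
    around 0 rad (hypothetical convention).
    """
    width = 2.0 * math.pi / num_classes
    shifted = (angle_rad + width / 2.0) % (2.0 * math.pi)
    # The final modulo guards against floating-point round-up at the 2*pi wrap.
    return int(shifted // width) % num_classes
```

With 8 classes for cars, each class spans 45°; with 4 classes for pedestrians, each spans 90°.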

Karlsruhe Stereo Video Sequences

This dataset contains a couple of high-quality stereo sequences recorded from a moving vehicle driving through the city of Karlsruhe. The sequences are saved as rectified images in *.png format. Calibration parameters of the camera and ground truth odometry from an OXTS RT 3000 GPS/IMU system are provided in a separate text file. You can download the datasets here.

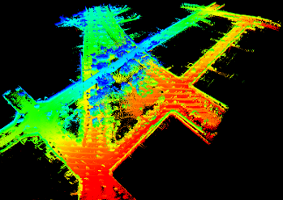

Karlsruhe Velodyne SLAM Dataset (incl. Stereo Video)

This dataset contains sensor recordings from our vehicle AnnieWAY at a bridge in the city of Karlsruhe. Driving a loop that passes both below and over the bridge makes it a very special scenario, specifically suited for 3D SLAM applications. The sensors used are:

- 3D Lidar Scanner: Velodyne HDL64E-S2

- Stereo Camera, calibrated

- GPS/IMU data: OXTS RT 3000

You can download the datasets here.

Specialized Cyclist Dataset

This dataset focuses on cyclist detection with a monocular RGB camera in various scenarios encountered on roads in autumn and winter (with snow), enabling researchers to run rigorous tests under various conditions. The training and test sets together contain 62297 images with about 18200 cyclist instances of 30 different cyclists. Images are provided in 1242x375 (KITTI resolution) and 1920x1080 (Full HD) resolution.

We used the popular KITTI label format so that researchers can reuse their existing test scripts. We encourage researchers to augment their test and validation datasets with extra cyclist instances in the same label and image formats.
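
In the KITTI object label format, each line of a label file describes one object with 15 whitespace-separated fields: type, truncation, occlusion, observation angle alpha, 2D bounding box (left, top, right, bottom), 3D dimensions (height, width, length), 3D location in camera coordinates, and rotation_y. The sketch below parses such lines and keeps the cyclists. It only assumes this standard field order; which fields are populated in the Specialized Cyclist Dataset is not stated here, and the class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class KittiObject:
    obj_type: str                            # e.g. "Car", "Pedestrian", "Cyclist"
    truncated: float                         # 0 (not truncated) .. 1 (truncated)
    occluded: int                            # occlusion state 0..3
    alpha: float                             # observation angle [rad]
    bbox: Tuple[float, float, float, float]  # left, top, right, bottom [px]
    dimensions: Tuple[float, float, float]   # height, width, length [m]
    location: Tuple[float, float, float]     # x, y, z in camera coordinates [m]
    rotation_y: float                        # yaw around the camera y axis [rad]


def parse_kitti_label_line(line: str) -> KittiObject:
    fields = line.split()
    if len(fields) < 15:
        raise ValueError("KITTI label lines have at least 15 fields")
    v = [float(x) for x in fields[1:15]]
    return KittiObject(fields[0], v[0], int(v[1]), v[2],
                       (v[3], v[4], v[5], v[6]), (v[7], v[8], v[9]),
                       (v[10], v[11], v[12]), v[13])


def cyclists(label_text: str) -> List[KittiObject]:
    """Return all objects of type 'Cyclist' from the contents of one label file."""
    objs = [parse_kitti_label_line(ln) for ln in label_text.splitlines() if ln.strip()]
    return [o for o in objs if o.obj_type == "Cyclist"]
```

Fields beyond the first 15 (such as the detection score in result files) are ignored, so the same parser can also read detector output in KITTI format.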

We hope that our Specialized Cyclist Dataset will accelerate progress in cyclist detection and improve cyclist safety around autonomous vehicles.

Dataset prepared in collaboration with WINKAM Lab, KIT, Intel, and Specialized Bicycles.

Here you can find the paper with a detailed description of the dataset spec.

LINK TBD

You can download the training set here:

https://drive.google.com/drive/u/0/folders/1inawrX9NVcchDQZepnBeJY4i9aAI5mg9

License Information

The datasets on this webpage are free to use for research and non-commercial projects. When downloading the datasets from this website, you accept our license agreements:

All datasets on this website are copyrighted by the respective authors and available under the Creative Commons Attribution-NonCommercial-ShareAlike 3.0 License:
