Perception and Scene Understanding in Railway Transport

Environmental perception and scene understanding are essential components for developing driver assistance systems and for automating railway transport. Perception systems rely on data collected by sensors such as cameras, RADAR, and LiDAR, which can be mounted on trains or positioned strategically alongside railway tracks. Advanced algorithms from pattern recognition and machine learning analyze this sensor data to detect pedestrians, trains, and obstacles, recognize signals, and assess track conditions. By integrating and processing this information, scene understanding algorithms enable real-time decision-making, improving the safety, efficiency, and reliability of railway operations.

Research Topics:

Rail-Bench: A Benchmark Suite for Environmental Perception

Self-driving cars have advanced significantly in recent years, largely owing to the availability of diverse and challenging benchmark suites for environmental perception. Autonomous trains, however, are still at an early stage of development, hindered by a lack of suitable benchmarks.

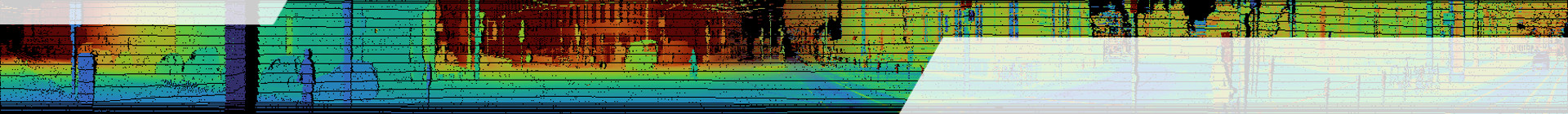

To accelerate progress in environmental perception within the railway domain, we are developing a comprehensive vision benchmark suite. This benchmark will address various rail traffic scenarios and include tasks for rail track detection, object detection, vegetation detection, object tracking, and ego-motion estimation.

The core of our benchmark suite will be an annotated training and test dataset, accessible through a public website. Models submitted to our platform will be evaluated automatically using task-specific and standardized metrics. Based on the achieved scores, submitted models can be ranked to provide an overview of recent advancements in the research field.
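The benchmark's actual metrics, data formats, and submission interface are not described here, so the following is only a minimal sketch of how automatic evaluation and ranking could work for one task. It assumes, hypothetically, that rail track detection is scored by mean intersection over union (IoU) of binary pixel masks; the names `mask_iou`, `Submission`, `evaluate`, and `leaderboard` are illustrative and not part of the Rail-Bench platform.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical mask format: the set of (row, col) pixels labelled "rail track".
Mask = set[tuple[int, int]]


def mask_iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of two binary masks."""
    union = pred | truth
    if not union:
        return 1.0  # both masks empty: treat as perfect agreement
    return len(pred & truth) / len(union)


@dataclass
class Submission:
    name: str
    predictions: list[Mask]  # one predicted mask per test image


def evaluate(sub: Submission, ground_truth: list[Mask]) -> float:
    """Mean IoU over all test images (assumed metric, for illustration only)."""
    if len(sub.predictions) != len(ground_truth):
        raise ValueError(f"{sub.name}: expected {len(ground_truth)} predictions")
    scores = [mask_iou(p, t) for p, t in zip(sub.predictions, ground_truth)]
    return sum(scores) / len(scores)


def leaderboard(subs: list[Submission], ground_truth: list[Mask]) -> list[tuple[str, float]]:
    """Score every submission and rank them from best to worst."""
    scored = [(s.name, evaluate(s, ground_truth)) for s in subs]
    return sorted(scored, key=lambda entry: entry[1], reverse=True)


if __name__ == "__main__":
    gt = [{(0, 0), (0, 1), (1, 1)}]
    subs = [
        Submission("model_b", [{(0, 0), (1, 0)}]),
        Submission("model_a", [{(0, 0), (0, 1)}]),
    ]
    # model_a ranks first (IoU 2/3), model_b second (IoU 1/4)
    for rank, (name, score) in enumerate(leaderboard(subs, gt), start=1):
        print(rank, name, round(score, 3))
```

Keeping the metric separate from the ranking step means each task (object detection, tracking, ego-motion estimation) could plug in its own scoring function while sharing the same leaderboard logic.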

Contact: M.Sc. Annika Bätz

Our project is funded by the mFUND innovation initiative of the Federal Ministry for Digital and Transport and supported by DB InfraGO, Digitale Schiene Deutschland, and the German Centre for Rail Traffic Research.